Telecom Paris

Dep. Informatique & Réseaux  J-L. Dessalles

May 2023

Cognitive Approach to Natural Language Processing (SD213)

Processing Aspectual Relations

Introduction

"Last year, when the birds flew by, the alarm went off"

This sentence can be understood as describing either a single event or a repetitive event (the latter is the only interpretation in French with the imperfect tense: L’année dernière, quand les oiseaux passaient, l’alarme se déclenchait.). You might even infer a causal link between the presence of the birds and the triggering of the alarm. How can machines achieve this?

The difficulty of the problem should be neither overestimated nor underestimated. The purpose of this lab is not to offer a definitive solution. It is rather to show that aspectual competence corresponds to an algorithm that is waiting to be reverse-engineered, and that this algorithm might not be that complex after all.

Aspectual correctness

The examples in French are stored in asp_Phrases.pl. Note that tense is noted using symbols such as _PP for present perfect or _PRET for preterite.

|

Indicate below the sentences (if any) which, according to you, are semantically odd.

(select them in the language in which you feel more comfortable) |

Aspectual switches

Viewpoint

| Considering self-similarity, would you associate the following verbs rather to figures or to grounds? |

Anchoring

- the market will collapse next week (= at some moment of next week)

- the market will collapse in one week (= it will take one week / after one week)

- the market will collapse in 2024 (= at some moment in 2024)

- the market will collapse in one year (= it will take one year / after one year)

Answer: the former is anchored in time, while the latter may be located anywhere.

We introduce the aspectual switch anchoring to capture this difference. It may take two values:

- anc:1, for example "2024",

- anc:0, for example "one year".

The implicit interpretation of "in" as meaning "after" is only possible with an unanchored period.

| Using these criteria, decide which of the following expressions should be regarded as anchored (try to prefix each phrase with "She died during"). |

Occurrence

Duration

- She will marry in 2024.

- She will use her bike in 2024.

Aspectual operators

Zoom

| dp vwp:f occ:sing anc:0 dur:D | ➜ | vwp:g |

In the implementation, this operator applies only to determiner phrases (dp).

Repetition

- When Mary was building the wall, Peter was sick.
  Quand Marie construisait le mur, Pierre était malade.

- When Mary was building the wall, Peter was cooking.
  Quand Marie construisait le mur, Pierre faisait la cuisine.

| vp/vpt vwp:f anc:0 occ:sing dur:D | ➜ | vwp:g occ:mult dur:min(D) |

Note that in the implementation, repetition affects only vp (verb phrases) or vpt (i.e. a verb phrase possibly followed by a prepositional phrase (vp [+ pp])). The mention dur:min(D) is used to force the repetition to last longer than the repeated event.
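The repetition rule above can be sketched in Python as a function over a feature dictionary. This is only an illustration under assumptions: the dictionary layout, the function name `repeat` and the tuple `("min", D)` standing for dur:min(D) are hypothetical and are not taken from asp_Lexicon.py.

```python
def repeat(category, features):
    """Hypothetical sketch of the repetition operator.

    Applies to vp/vpt frames that are unanchored single figures and
    returns a ground (g) with multiple occurrences, or None otherwise.
    """
    if category not in ("vp", "vpt"):
        return None
    pattern = (features.get("vwp"), features.get("anc"), features.get("occ"))
    if pattern != ("f", 0, "sing"):
        return None
    repeated = dict(features)          # leave the input frame untouched
    repeated["vwp"] = "g"
    repeated["occ"] = "mult"
    # ("min", D) is an assumed encoding of dur:min(D): D acts as a lower bound
    repeated["dur"] = ("min", features["dur"])
    return repeated


# dur:0.9 is borrowed from the outputs shown later in this lab
frame = {"vwp": "f", "anc": 0, "occ": "sing", "dur": 0.9}
print(repeat("vpt", frame))   # repetition applies to a vpt
print(repeat("pp", frame))    # but not to a pp
```

Returning `None` when the pattern does not match mirrors the idea that an operator is only tried when it is applicable to the current frame.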

| Which examples among the following may reasonably receive a repetitive interpretation? |

Slicing

- She will marry in 2024

| pp vwp:f anc:1 occ:sing dur:D | ➜ | vwp:f anc:1 dur:max(D) |

In the implementation, only anchored temporal complements (pp) can be sliced. Note the use of max to indicate that a slice should be shorter than its container.
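As a rough illustration of how a max bound might be used, the sketch below slices an anchored pp and then checks whether an event fits inside it. The names `slice_pp` and `fits`, the tuple encoding `("max", D)` and the example durations are assumptions made for this sketch; they do not come from the lab's code.

```python
import math


def slice_pp(category, features):
    """Hypothetical sketch of slicing: only anchored single figures of type pp."""
    if category != "pp":
        return None
    pattern = (features.get("vwp"), features.get("anc"), features.get("occ"))
    if pattern != ("f", 1, "sing"):
        return None
    sliced = dict(features)
    # ("max", D) is an assumed encoding of dur:max(D): D acts as an upper bound
    sliced["dur"] = ("max", features["dur"])
    return sliced


def fits(event_dur, bound):
    """Check a log10 duration against a ("max", D) or ("min", D) bound."""
    kind, limit = bound
    return event_dur <= limit if kind == "max" else event_dur >= limit


# One year expressed as a base-10 logarithm of seconds (about 7.5)
year = math.log10(365 * 24 * 3600)
in_2024 = {"vwp": "f", "anc": 1, "occ": "sing", "dur": year}

sliced = slice_pp("pp", in_2024)
print(fits(3.5, sliced["dur"]))   # an event of about 53 minutes fits inside a year
print(fits(8.0, sliced["dur"]))   # an event of about three years does not
```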

| Which of the following examples are likely to involve a slice? |

Inchoativity

- She will eat soup in two minutes and 30 seconds

Then, an admissible interpretation pops up: that she will start eating soup after two minutes and 30 seconds.

In the current implementation, this behaviour is generated by the slice operator. minutes, for instance, will correspond to two entries in the lexicon: the usual indication of duration, and another meaning which refers to "minutes from now". The latter is an anchored figure, while the former is unanchored.

Predication

Consider the sentences:

- she will !(snore) during the show

- she will !(snore for ten minutes)

In 2, predication concerns the whole phrase "snore for ten minutes". In this case, it is rather the duration that must be seen as unexpected/wished/feared.

One effect of predication is to convert events into non-durative figures (f). If we say about a vegetarian person:

- she ate meat in 2021

| vp/vpt anc:0 dur:D | ➜ | vwp:f anc:1 dur:nil(D) |

In the implementation, predication affects only vp (verb phrases) or vpt (i.e. a verb phrase possibly followed by a prepositional phrase (vp [+ pp])).
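The predication rule can be pictured with a small Python function. This is a sketch under stated assumptions: the function name `predicate`, the dictionary layout, the tuple `("nil", D)` for dur:nil(D) and the duration given to "snore" are all hypothetical. The leading `!` on the image only imitates the notation used in the examples above.

```python
def predicate(category, features):
    """Hypothetical sketch of predication on an unanchored vp/vpt frame."""
    if category not in ("vp", "vpt") or features.get("anc") != 0:
        return None
    result = dict(features)
    result["vwp"] = "f"      # the predicated event becomes a figure
    result["anc"] = 1        # anchored, following the rule as stated
    # ("nil", D) is an assumed encoding of dur:nil(D): duration is neutralised
    result["dur"] = ("nil", features["dur"])
    if "im" in result:
        result["im"] = "!" + result["im"]   # mark the image as predicated
    return result


# Assumed entry for "snore": a ground lasting about ten minutes (10**2.8 s)
snore = {"vwp": "g", "anc": 0, "dur": 2.8, "im": "snore"}
print(predicate("vp", snore))
```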

In the sentence:

|

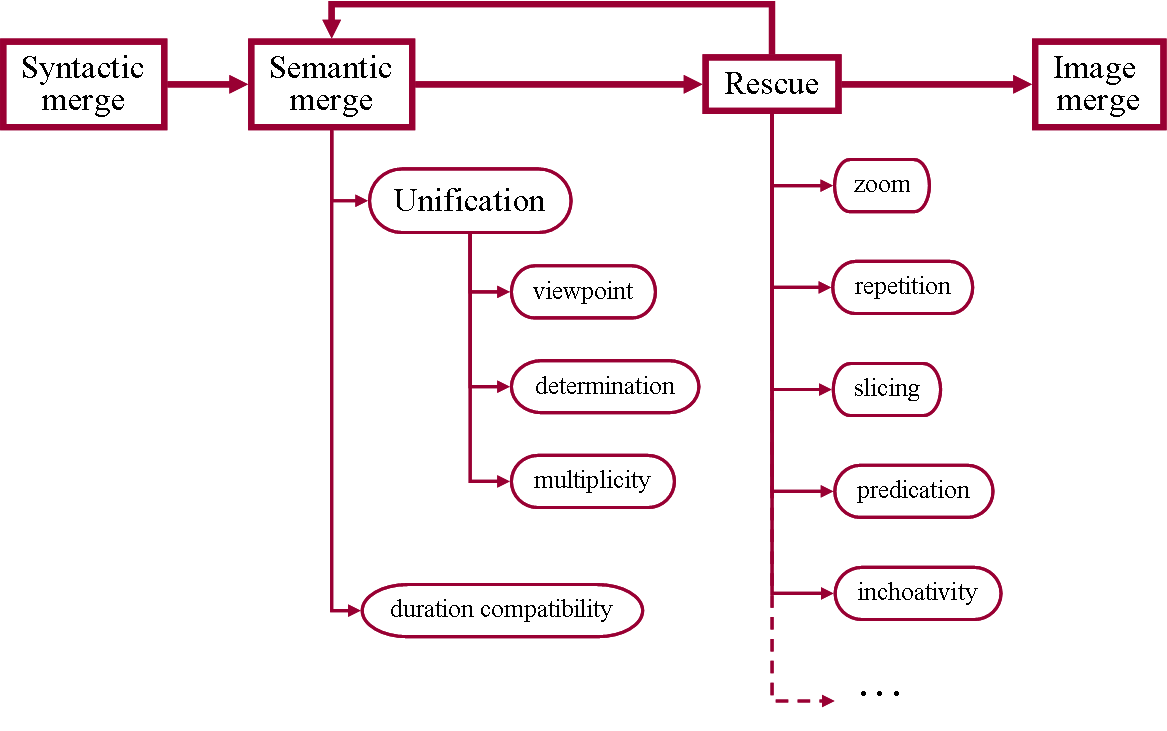

Processing Aspect

Discovering lexical structures

lexicon(eat, vp, [vwp:f, im:eat_meal, dur:3.5]).

Here, vp means that the verb can be seen as a verb phrase on its own and does not expect any complement.

The feature vwp means viewpoint. Its value may be f (figure) or g (ground).

The feature im stands for ‘image’. It would ideally refer to some perceptive representation of the scene. Here, it will just consist of nested textual labels.

The feature dur represents typical duration (when applicable) as a base-10 logarithm of a number of seconds. For instance, the above duration noted 3.5 represents 10^3.5 ≈ 3162 sec. ≈ 53 min.
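The logarithmic encoding of dur can be checked with a few lines of Python. The helper names `log_to_seconds` and `seconds_to_log` are made up for this illustration and are not part of the lab's code.

```python
import math


def log_to_seconds(dur):
    """Convert a dur value (base-10 logarithm of seconds) into seconds."""
    return 10 ** dur


def seconds_to_log(seconds):
    """Convert a duration in seconds into a dur value."""
    return math.log10(seconds)


print(round(log_to_seconds(3.5)))           # 3162 seconds
print(round(log_to_seconds(3.5) / 60))      # 53 minutes
print(round(seconds_to_log(10 * 60), 1))    # 2.8 for ten minutes
```

Working on a logarithmic scale keeps very different durations, from seconds to years, within a small numeric range, which helps when choosing a dur value for a new lexical entry.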

|

Introduce new lexical entries for the verb ‘draw’, as it is used in "draw a circle".

Don’t forget to indicate duration, viewpoint and image. |

|

Introduce another lexical entry for the verb ‘draw’, meant as an activity (without complement).

Don’t forget to indicate duration, viewpoint and image. |

Discovering the grammar

vpt --> vp, pp.

This rule means that a vpt is a verb phrase vp followed by a prepositional phrase pp.

Note that the grammar is binary (no more than two items on the right-hand side of rules). This is meant to represent the action of the syntactic merge operation.

Note also the use of ip (inflection phrase), of tp (tense phrase) and of dp (determiner phrase), in accordance with modern linguistics.

Semantic merge

Consider for instance the prepositional phrase in ten minutes.

The preposition in corresponds to the feature structure [vwp:f].

The phrase ten minutes corresponds to the feature structure [vwp:f, anc:0, im:10_minutes, dur:2.8].

You can see that both structures match by executing the Prolog program asp_Main.pl or the Python program asp_Main.py.

|

Note: The Prolog version is currently disabled.

Only the Python version is up to date. |

In Prolog:

?- test(pp).

---> in ten minutes

The sentence is correct [pp([vwp:f, anc:0, im:10(minute),dur:2])]

To execute the Python version, you need:

- asp_Main.py (main program with parser)

- asp_Grammar.py (interface with Prolog grammar)

- syn_Grammar.py (interface with Prolog grammar)

- asp_Lexicon.py (this is where aspect is processed)

- syn_util.py

- asp_Lexicon.pl

- asp_Grammar.pl

- and optionally

In Python:

python asp_Main.py

Input a sentence > [press Enter]

or maybe a phrase > in ten minutes

__ pp: (vwp:f, anc:0, dur:2.8)

in_10_minutes

__ pp: (vwp:f, anc:1, dur:2.8)

in_10_minutes_from_now

Viewpoint, anchoring and multiplicity are merged based on identity through unification. Duration is merged by checking duration compatibility.
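A minimal sketch of such a merge is given below. It assumes that feature structures are plain dictionaries, that a missing feature unifies with anything, and that two durations are compatible when they differ by at most one order of magnitude. The `tolerance` parameter, the choice to keep the larger duration and the function name `merge` are assumptions of this sketch; the actual program may proceed differently.

```python
def merge(left, right, tolerance=1.0):
    """Hypothetical semantic merge of two feature dictionaries.

    Returns the merged features, or None when the structures do not match.
    """
    merged = {}
    # Viewpoint, anchoring and multiplicity must be identical when both are set
    for key in ("vwp", "anc", "occ"):
        a, b = left.get(key), right.get(key)
        if a is not None and b is not None and a != b:
            return None
        value = a if a is not None else b
        if value is not None:
            merged[key] = value
    # Durations are log10 values: compare them within an assumed tolerance
    durations = [d for d in (left.get("dur"), right.get("dur")) if d is not None]
    if len(durations) == 2 and abs(durations[0] - durations[1]) > tolerance:
        return None
    if durations:
        merged["dur"] = max(durations)   # arbitrary choice for this sketch
    return merged


prep_in = {"vwp": "f"}
ten_minutes = {"vwp": "f", "anc": 0, "dur": 2.8}
print(merge(prep_in, ten_minutes))                  # both structures match

ground_phrase = {"vwp": "g", "anc": 0, "dur": 0.9}
print(merge({"vwp": "f"}, ground_phrase))           # figure vs ground: no match
```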

Semantic merge is not limited to mere feature matching. The "intelligent" part of aspectual processing lies in the set of aspectual operators (repeat, slice, predication...). These operators are implemented as "rescue" operations. In Prolog, the rescue procedure is found in asp_Merge.pl. It is called repeatedly at each backtrack.

In Python, the rescue procedure is in asp_Lexicon.py as a method in the class WordEntry.

The point of rescue is to transform the aspectual representation of the current frame through slicing, repetition or predication when applicable. Note that the applicability of operators depends on the syntactic category (e.g. only pp can be sliced).
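The dependence of operators on syntactic category can be pictured as a lookup table. The following sketch is not the `WordEntry.rescue` method of asp_Lexicon.py: the table `OPERATORS`, the function names and the tuple encodings of min, max and nil durations are hypothetical, and each operator is reduced to the patterns given in the rules above.

```python
def zoom(f):
    ok = (f.get("vwp"), f.get("occ"), f.get("anc")) == ("f", "sing", 0)
    return {**f, "vwp": "g"} if ok else None


def repeat(f):
    ok = (f.get("vwp"), f.get("anc"), f.get("occ")) == ("f", 0, "sing")
    return {**f, "vwp": "g", "occ": "mult", "dur": ("min", f.get("dur"))} if ok else None


def slice_(f):
    ok = (f.get("vwp"), f.get("anc"), f.get("occ")) == ("f", 1, "sing")
    return {**f, "dur": ("max", f.get("dur"))} if ok else None


def predicate(f):
    ok = f.get("anc") == 0
    return {**f, "vwp": "f", "anc": 1, "dur": ("nil", f.get("dur"))} if ok else None


# Which operators may be tried for each syntactic category
OPERATORS = {
    "dp": [zoom],
    "pp": [slice_],
    "vp": [repeat, predicate],
    "vpt": [repeat, predicate],
}


def rescue(category, frame):
    """Yield (operator name, alternative frame) for each applicable operator."""
    for operator in OPERATORS.get(category, []):
        alternative = operator(frame)
        if alternative is not None:
            yield operator.__name__, alternative


frame = {"vwp": "g", "anc": 0, "occ": "sing", "dur": 0.9}
for name, alternative in rescue("vpt", frame):
    print(name, alternative)
```

Writing `rescue` as a generator loosely imitates the Prolog behaviour described above, where a new alternative is produced at each backtrack.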

Playing with the program

To run the program on French sentences, open asp_Main.pl and comment/uncomment the first two lines to change the language.

In Python, comment/uncomment two lines in the main procedure of asp_Main.py.

This will load asp_Lexique.pl instead of asp_Lexicon.pl and asp_Phrases.pl instead of asp_Sentences.pl.

At this point, the program correctly interprets some sentences. The interpretation of examples is given as a kind of paraphrase. For instance, in Prolog:

?- test.

Sentence ---> Mary will drink a glass_of_wine

== Ok. f.d [occ:sing, dur:0.9] ---> in the future Mary ingest this glass_of_wine

and in Python (no paraphrase in Python):

__ s: (vwp:f, anc:1, occ:sing, dur:0.9)

ingest(Mary,1_glass_of_wine) loc(+)

Now test drink a glass_of_wine in ten seconds.

In Prolog, you have to call:

?- test(vpt).

Sentence ---> drink the glass_of_wine in ten seconds

and you have to press ; (semicolon) after true to get all interpretations.

In Python:

python asp_Main.py

Input a sentence > [press Enter]

or maybe a phrase > drink a glass_of_wine in ten seconds

|

You should get two interpretations for drink a glass_of_wine in ten seconds.

Which aspectual operator is involved in this difference of interpretation? |

|

Consider the sentence (in Prolog, simply type test. to test a sentence):

Mary _PRES drink a glass_of_wine

You should get only one interpretation. Which aspectual operator applied here? |

Why does this sentence get rejected? If we try the following:

python asp_Main.py

Input a sentence > [press Enter]

or maybe a phrase > drink water for ten seconds

__ vpt: (vwp:g, anc:0, dur:0.9)

ingest(_,water) dur(for_10_seconds)

In Prolog, the corresponding call is ?- test(vpt). (in Python, just type in the phrase):

Phrase of type vpt ---> drink water during one minute

== Ok. g.u [occ:sing,dur:0.9] ---> drink_some zoom 1 minute water

we get a correct interpretation of the phrase as a ground (g).

If we look at the definition of will in asp_Lexicon.pl, we can see that it corresponds to a figure f:

lexicon(will, t, [anc:_, vwp:f, im:+]).

This explains the mismatch.

Yet, we feel that Mary will drink water for ten seconds should receive some kind of interpretation. If you think about it, you will observe that it is only true if "drink water for ten seconds" can be regarded as unexpected/wished/feared (e.g. if it is a feat / if Mary does not drink enough / if there is not enough water for all). In other words, the sentence is acceptable if "drink water for ten seconds" translates into a predicate that receives an attitude. As such it loses its temporality.

Open asp_Merge.pl and uncomment the call to predication in the rescue0 clause.

In Python, augment the list of operators as asked in WordEntry.rescue in asp_Lexicon.py.

Now, the program is able to assign an attitude to vpt through the predication operation. Predication is indicated with an exclamation point !... in the output. Now we get several interpretations for the phrase drink water for ten seconds, including

__ vpt: (vwp:g, anc:0, dur:0.9)

ingest(_,water) dur(for_10_seconds)

__ vpt: (vwp:f, anc:0, dur:nil(0.9))

!ingest(_,water) dur(for_10_seconds)

| Test the sentence "Mary will drink water for ten seconds" and verify that it now receives some interpretations. Why is it so? |

|

Try the sentence Mary will drink water in 2028.

It is rejected. Why? |

|

Why is the sentence Mary will drink water in 2028 now accepted?

(you may analyze the pp phrase in 2028 and see that there is now a new alternative)

To answer this question, you may have to locate the compare procedure in the Duration class to see how a Nil duration (due to predication) compares with a max duration (due to slicing). |

Going further

- add self.predication_eternal in the list of rescue operations

- enable the test isPredicate as suggested in semantic_merge

- (optional) set MAXPREDICATION to 0 to allow any number of predications (in fact, 2 max) on top of each other

python asp_Main.py asp_Sentences.pl Output.txt 1

and reading the content of Output.txt (the trailing 1 sets the trace level to 1).

You may then try to introduce new lexical words (as you did with ‘draw’) and new aspectual words such as ‘after’, try sentences with imperfect tense, explore sentences in French, implement a lexicon and grammar for another language, and so on. Note that you can adapt the trace level to see more detail.

Suggestion
