
Telecom Paris

Dep. Informatique & Réseaux  J-L. Dessalles

May 2023


Cognitive Approach to Natural Language Processing (SD213)


Argumentation & Deliberative reasoning

Foreword

CAN (Conflict-Abduction-Negation) is a minimal model of the cognitive capabilities underlying argumentation and deliberative reasoning. To illustrate the method, we will use a dialogue adapted from a real conversation (see below). Any other dialogue could, in principle, be processed through the same procedure, just by changing the mini-knowledge base fed into the program.

You will have the opportunity to observe:

- the structure of a knowledge base,

- the notion of necessity, which subsumes desires and beliefs,

- the deliberative procedure (C-A-N), which generates relevant arguments.

The dialogue

English version

[Context: A is repainting doors. He decided to remove the old paint first, which proves to be hard work.]

A1- I have to repaint my doors. I've burned off the old paint. It worked OK, but not everywhere. It's really tough work! [...] In the corners, all this, the mouldings, it's not feasible! [...]

B1- You have to use a wire brush.

A2- Yes, but that wrecks the wood.

B2- It wrecks the wood... [pause 5 seconds]

A3- It's crazy! It's more trouble than buying a new door.

B3- Oh, that's why you'd do better just sanding and repainting over.

A4- Yes, but if we are the fifteenth ones to think of that!

B4- Oh, yeah...

A5- There are already three layers of paint.

B5- If the old remaining paint sticks well, you can fill in the peeled spots with filler compound.

A6- Yeah, but the surface won't look great. It'll look like an old door.

Original French version

[Contexte: A repeint ses portes. Il a décidé de décaper l'ancienne peinture, ce qui se révèle pénible.]

A1- Ben moi, j'en bave actuellement parce qu'il faut que je refasse mes portes, la peinture. Alors j'ai décapé à la chaleur. Ca part bien. Mais pas partout. C'est un travail dingue, hein?

B- heu, tu as essayé de. Tu as décapé tes portes?

A1a- Ouais, ça part très bien à la chaleur, mais dans les coins, tout ça, les moulures, c'est infaisable. [plus fort] Les moulures.

B- Quelle chaleur? La lampe à souder?

A- Ouais, avec un truc spécial.

B1- Faut une brosse, dure, une brosse métallique.

A2- Oui, mais j'attaque le bois.

B2- T'attaques le bois.

A3- [pause 5 secondes] Enfin je sais pas. C'est un boulot dingue, hein? C'est plus de boulot que de racheter une porte, hein?

B3- Oh, c'est pour ça qu'il vaut mieux laiss... il vaut mieux simplement poncer, repeindre par dessus

A4- Ben oui, mais si on est les quinzièmes à se dire ça

B4- Ah oui.

A5- Y a déjà trois couches de peinture, hein, dessus.

B5- Remarque, si elle tient bien, la peinture, là où elle est écaillée, on peut enduire. De l'enduit à l'eau, ou

A6- Oui, mais l'état de surface est pas joli, quoi, ça fait laque, tu sais, ça fait vieille porte.

A1- repaint, burn-off, mouldings, tough work

B1- wire brush

A2- wood wrecked

A3- tough work

B3- sanding

A5- several layers

B5- filler compound

A6- not nice surface

This is an argumentative discussion. Discussions radically differ from narratives. They consist in characteristic alternations between problems and solutions.

| Problem: | doors are not nice |
| Solution: | repaint |
| Problem: | old layers of paint |
| Solution: | burn-off |
| Problem: | tough work due to mouldings |
| Solution: | wire brush |
| Problem: | wood wrecked |
| Solution: | no wire brush |
| Problem: | tough work due to mouldings (here, one has to assume that the intensity of the ‘tough work’ problem increases) |
| Solution: | no burn-off |
| Problem: | doors are not nice |
| Solution: | sanding |
| Problem: | there are several layers |
| Solution: | filler compound |

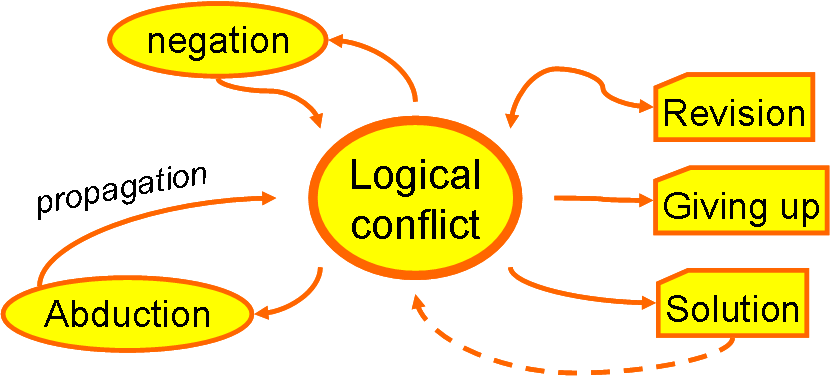

Conflicting necessities

As far as the computation of logical relevance is concerned, the distinction between beliefs and desires can be ignored. Beliefs and desires are subsumed by the notion of necessity.

To describe the argumentation procedure, we use a single notion, called necessity, that captures the intensity with which a given situation is believed or wished. Necessities are negative in case of disbelief or avoidance. At each step t of the reasoning procedure, a function ν_t(T) is supposed to provide the necessity of any predicate T on demand (we will omit the subscript t to improve readability). The necessity of T may be unknown at time t. We suppose that necessities are consistent with negation: ν(¬T) = -ν(T). The main purpose of considering necessities is that they propagate through logical and causal links, as we will see.

We say that a predicate is realized if it is regarded as being true in the current state of the world. A predicate T is said to be conflicting if:


- ν(T) > 0 while T is not realized, or

- ν(T) < 0 while T is realized.
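The definitions above can be sketched in a few lines of Python. This is only an illustration, not the code of the lab program: the function names, the representation of ¬T as a string with a leading `-`, and the sample necessity values are assumptions made for the example.

```python
from typing import Dict, Optional, Set


def negate(pred: str) -> str:
    """Return the negation of a predicate, written with a leading '-'."""
    return pred[1:] if pred.startswith("-") else "-" + pred


def necessity(pred: str, nu: Dict[str, float]) -> Optional[float]:
    """Return nu(T), using nu(-T) = -nu(T); None when the necessity is unknown."""
    if pred in nu:
        return nu[pred]
    if negate(pred) in nu:
        return -nu[negate(pred)]
    return None


def is_conflicting(pred: str, nu: Dict[str, float], world: Set[str]) -> bool:
    """T is conflicting if it is wished but not realized, or realized but unwanted."""
    n = necessity(pred, nu)
    if n is None:
        return False  # unknown necessity: no conflict can be detected
    realized = pred in world  # `world` holds the predicates regarded as true
    return (n > 0 and not realized) or (n < 0 and realized)


if __name__ == "__main__":
    nu = {"nice_doors": 20, "tough_work": -10}  # illustrative values
    world = {"tough_work"}                      # the work is currently tough
    print(is_conflicting("nice_doors", nu, world))  # True: wished, not realized
    print(is_conflicting("tough_work", nu, world))  # True: realized, unwanted
    print(necessity("-tough_work", nu))             # 10
```

Both situations count as conflicts for the same reason: the sign of the necessity disagrees with the realized state, whether the necessity stems from a belief or from a desire.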

The C-A-N procedure

| Conflict: | If there is no current conflict, look for a new conflict (T, N), where T is a recently visited state of affairs. |
| Solution: | If N > 0 and T is possible (i.e. ¬T is not realized), decide that T is the case (if T is an action, do it or simulate it). |
| Abduction: | Look for a cause C of T or a reason C for T. If C is mutable with intensity N, make ν(C) = N and restart from the new conflict (C, N). |
| Negation: | Restart the procedure with the conflict (¬T, -N). |
| Give up: | Make ν(T) = -N. |
| Revision: | Reconsider the value of ν(T). |
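To make the loop more concrete, here is a heavily simplified Python sketch of a conflict-abduction-negation cycle. It is not the lab's rel_CAN program. The class `Can`, the `commit` table, the `nice_doors` rule and the reading of "mutable with intensity N" as "no opposite commitment of at least that strength" are all assumptions made for illustration. Only the `tough_work` rule and its preference of -10 come from the lab material; the value 20 for `nice_doors` is taken from the traces.

```python
from typing import Dict, List, Optional, Set, Tuple

# Toy knowledge base: (effect, causes). The nice_doors rule is hypothetical.
RULES: List[Tuple[str, List[str]]] = [
    ("nice_doors", ["repaint", "burn_off"]),
    ("tough_work", ["burn_off", "mouldings", "-wire_brush"]),
]
ACTIONS: Set[str] = {"repaint", "burn_off", "wire_brush"}
FACTS: Set[str] = {"mouldings"}


def negate(lit: str) -> str:
    return lit[1:] if lit.startswith("-") else "-" + lit


def in_world(lit: str, true: Set[str]) -> bool:
    """A negated literal holds when its atom is absent from the world."""
    return (lit.lstrip("-") in true) != lit.startswith("-")


class Can:
    def __init__(self, preferences: Dict[str, float]) -> None:
        self.preferences = preferences
        self.commit: Dict[str, float] = {}  # action -> signed necessity

    def world(self) -> Set[str]:
        """Forward-chain the causal rules from facts and performed actions."""
        true = FACTS | {a for a, n in self.commit.items() if n > 0}
        changed = True
        while changed:
            changed = False
            for effect, causes in RULES:
                if effect not in true and all(in_world(c, true) for c in causes):
                    true.add(effect)
                    changed = True
        return true

    def holds(self, lit: str) -> bool:
        return in_world(lit, self.world())

    def find_conflict(self) -> Optional[Tuple[str, float]]:
        for pred, n in self.preferences.items():
            if (n > 0 and not self.holds(pred)) or (n < 0 and self.holds(pred)):
                return pred, n
        return None

    def make_true(self, lit: str, n: float) -> Optional[str]:
        """Abduction: return one decision that helps make `lit` true, or None."""
        atom = lit.lstrip("-")
        wanted = -1 if lit.startswith("-") else 1
        if atom in ACTIONS:
            current = self.commit.get(atom, 0)
            if current * wanted < 0 and abs(current) >= n:
                return None  # opposite commitment is at least as strong
            self.commit[atom] = wanted * n
            return lit
        for effect, causes in RULES:
            if effect != atom:
                continue
            if wanted > 0:  # make a missing cause true
                candidates = [c for c in causes if not self.holds(c)]
            elif all(self.holds(c) for c in causes):  # break an active rule
                candidates = [negate(c) for c in causes]
            else:
                candidates = []
            for c in candidates:
                decision = self.make_true(c, n)
                if decision is not None:
                    return decision
        return None

    def run(self, max_steps: int = 10) -> List[str]:
        decisions: List[str] = []
        for _ in range(max_steps):
            conflict = self.find_conflict()
            if conflict is None:
                break
            pred, n = conflict
            # Negation: an unwanted realized T becomes the wish for -T.
            target, intensity = (pred, n) if n > 0 else (negate(pred), -n)
            decision = self.make_true(target, intensity)
            if decision is None:
                break  # give up (the traces show the lab program asking the user)
            decisions.append(decision)
        return decisions


if __name__ == "__main__":
    print(Can({"nice_doors": 20, "tough_work": -10}).run())
    # ['repaint', 'burn_off', 'wire_brush']
```

In this sketch, `burn_off` cannot be undone by the `tough_work` conflict of intensity 10 because it was decided with intensity 20, so abduction moves on to the next cause and proposes the wire brush.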

The program

A version of the program used in this lab has been written in Python. It is available from there, together with the Prolog files.

A short description of the Python program is (or will be) available from there.

The implementation consists of five files, listed here under their Prolog names:

Structure

| rel_knowl.pl | loads the domain knowledge |
| rel_doors.pl | domain knowledge |
| rel_util_full.pl | displays windows showing progress in reasoning |
| rel_util.pl | replaces rel_util_full.pl when graphic display is not available |
| rel_world.pl | world processing |
| rel_CAN.pl | main program; contains the C-A-N procedure |

All files, both in Prolog and in Python, are stored together there. Run the program by executing rel_CAN.

- Prolog: type ‘go.’ If you want to see how knowledge evolves through time, modify rel_CAN.pl to load rel_util_full.pl instead of rel_util.pl at the beginning of rel_CAN.pl (this will only work if your SWI-Prolog is compatible).

- Python: you may change the trace level at the end of rel_CAN.py.

?- go.

Conflict of intensity -20 with nice_doors

------> Decision : repaint

Conflict of intensity 20 with nice_doors

------> Decision : burn_off

Conflict of intensity -10 with tough_work

Conflict of intensity -10 with tough_work

------> Decision : wire_brush

Conflict of intensity 20 with nice_doors

------> Decision : -wire_brush

Conflict of intensity -10 with tough_work

Conflict of intensity -10 with tough_work

We are about to live with tough_work ( -10 )!

If you want to change preference for tough_work ( -10 ), enter number followed by '.' (or else: 'n.')

|: -22.

Conflict of intensity -22 with tough_work

------> Decision : -burn_off

Conflict of intensity 20 with nice_doors

------> Decision : -wood_wrecked

Conflict of intensity 20 with nice_doors

------> Decision : sanding

Conflict of intensity 20 with nice_doors

Conflict of intensity 20 with nice_doors

------> Decision : filler_compound

For now, the output is much shorter:

?- go.

Conflict of intensity -20 with nice_doors

------> Decision : repaint

Conflict of intensity 20 with nice_doors

------> Decision : burn_off

Conflict of intensity 20 with nice_doors

------> Decision : -wood_wrecked

true.

We can change the trace level to 3 by typing tl(3). We then have to press a key periodically to proceed (press ‘q’ to interrupt).

2 ?- tl(3).

true.

3 ?- go.

(Re)start...

Conflict of intensity -20 with nice_doors

Negating -nice_doors , considering nice_doors

|: repaint is revisable because it is an action with no prerequisite

------> Decision : repaint

|: (Re)start...

Conflict of intensity 20 with nice_doors

burn_off is revisable because it is an action with no prerequisite

------> Decision : burn_off

|: (Re)start...

Conflict of intensity 20 with nice_doors

-wood_wrecked is revisable because its status is unknown

------> Decision : -wood_wrecked

|: (Re)start...

true.

Trace level 5 reveals the functioning of the C-A-N procedure in minute detail. Observe the level 5 trace on the beginning of the dialogue (you may interrupt by pressing ‘q’).

You may identify the recurring occurrence of the three main phases: conflict, abduction, negation.

Knowledge representation

tough_work <=== burn_off + mouldings + -wire_brush.

This rule says that tough_work causally results from burn_off when there are mouldings and one does not use a wire_brush. Knowledge includes the following types of items:

- causal rules, like the one above (the program can also deal with incompatibility rules, which are not used for this dialogue)

- default rules, such as: default(-soft_wood, _). This rule says that by default, the wood is not soft.

- preference rules, such as: preference(tough_work, -10). This rule says that tough_work should be avoided with intensity 10.
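As a rough illustration of how these three kinds of items could be held in memory, the following Python sketch parses the causal rule notation and checks whether a rule fires. The function names and data layout are assumptions for the example and do not describe how rel_knowl or rel_world actually store knowledge. Here, stated facts override defaults, and a literal that is neither stated nor a default is treated as not holding.

```python
from typing import Dict, List, Set, Tuple


def negate(lit: str) -> str:
    return lit[1:] if lit.startswith("-") else "-" + lit


def parse_causal_rule(text: str) -> Tuple[str, List[str]]:
    """Split 'effect <=== c1 + c2 + ...' into (effect, [c1, c2, ...])."""
    head, body = text.rstrip(". \n").split("<===")
    return head.strip(), [cause.strip() for cause in body.split("+")]


def holds(lit: str, facts: Set[str], defaults: Set[str]) -> bool:
    """Stated facts win; a default applies only if nothing contradicts it."""
    if lit in facts:
        return True
    if negate(lit) in facts:
        return False
    return lit in defaults


def rule_fires(causes: List[str], facts: Set[str], defaults: Set[str]) -> bool:
    """A causal rule fires when all of its causes hold."""
    return all(holds(cause, facts, defaults) for cause in causes)


if __name__ == "__main__":
    preferences: Dict[str, float] = {"tough_work": -10}  # preference(tough_work, -10)
    defaults: Set[str] = {"-soft_wood"}                  # default(-soft_wood, _)
    effect, causes = parse_causal_rule(
        "tough_work <=== burn_off + mouldings + -wire_brush."
    )
    facts = {"burn_off", "mouldings", "-wire_brush"}     # hypothetical situation
    print(effect, causes)                       # tough_work ['burn_off', 'mouldings', '-wire_brush']
    print(rule_fires(causes, facts, defaults))  # True
    print(holds("-soft_wood", facts, defaults))                   # True (by default)
    print(holds("-soft_wood", facts | {"soft_wood"}, defaults))   # False (overridden)
```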

After deciding on burn_off, the program makes a strange "decision": that the wood is not wrecked. Why? It has no information about whether the wood is wrecked or not, and so wood_wrecked is considered mutable.

| Explain why abduction comes to considering wood_wrecked and which rule (in rel_doors.pl) is responsible. |

The purpose of a premise like -wood_wrecked is to capture with a static rule what would be an unanticipated exception in real life. There could be a myriad of possible such exceptions. We do not want our rule-based abductive system to consider them all in sequence.

|

Use the default predicate in rel_doors.pl to make this exception invisible to abduction. Default facts are considered true as long as they are not conflicting with other facts. Copy the new rule below.

Verify that the "decision" about wood_wrecked has disappeared. |

Modifying knowledge

?- state(mouldings).

fact mouldings added to the world

true.

?- go.

Conflict of intensity -20 with nice_doors

------> Decision : repaint

Conflict of intensity 20 with nice_doors

------> Decision : burn_off

Conflict of intensity -10 with tough_work

Conflict of intensity -10 with tough_work

------> Decision : wire_brush

true.

OK, we are one step further. What about the softness of the wood? Add it by typing state(soft_wood). and then go. once more (in Python, add soft_wood as an initial_situation). It does not change anything.

|

Add a domain rule to rel_doors.pl that leads to wood_wrecked when we use a wire brush and the wood is soft.

Copy the line below. Verify that the program is able to abandon the idea of using a wire brush! |

| Enter a value that is more problematic than the intensity of nice_doors, e.g. -22. What happens? Can you iterate the process by choosing ever-increasing dissatisfaction? |

Note that knowledge entered through state(_) persists for the rest of the session, but it is lost when you quit SWI-Prolog. To make it permanent, add initial_situation(_) declarations to rel_doors.pl instead.

|

Uncomment the two commented causal rules in rel_doors.pl. Reload the program.

As in the real dialogue, the program suggests sanding.

Now state that there are several layers: state(several_layers). and then go. What happens? |

Determinism

| Move the clause mentioning filler_compound to the first position among causal clauses. What happens? Does it change the dialogue significantly? |

| In the real dialogue, A is considering another action, which is to buy a new door (A3). Explain why this action is not considered any further. Does this fit with the CAN procedure? |

Monotonicity

| Explain in what way the program is non-monotonic. |

Suggestion

- a dialogue on covid-19, vaccines and the like, or on AI, ChatGPT and the like.

- the discussion on drugs during pregnancy, as discussed in this podcast by The Guardian.
